Method

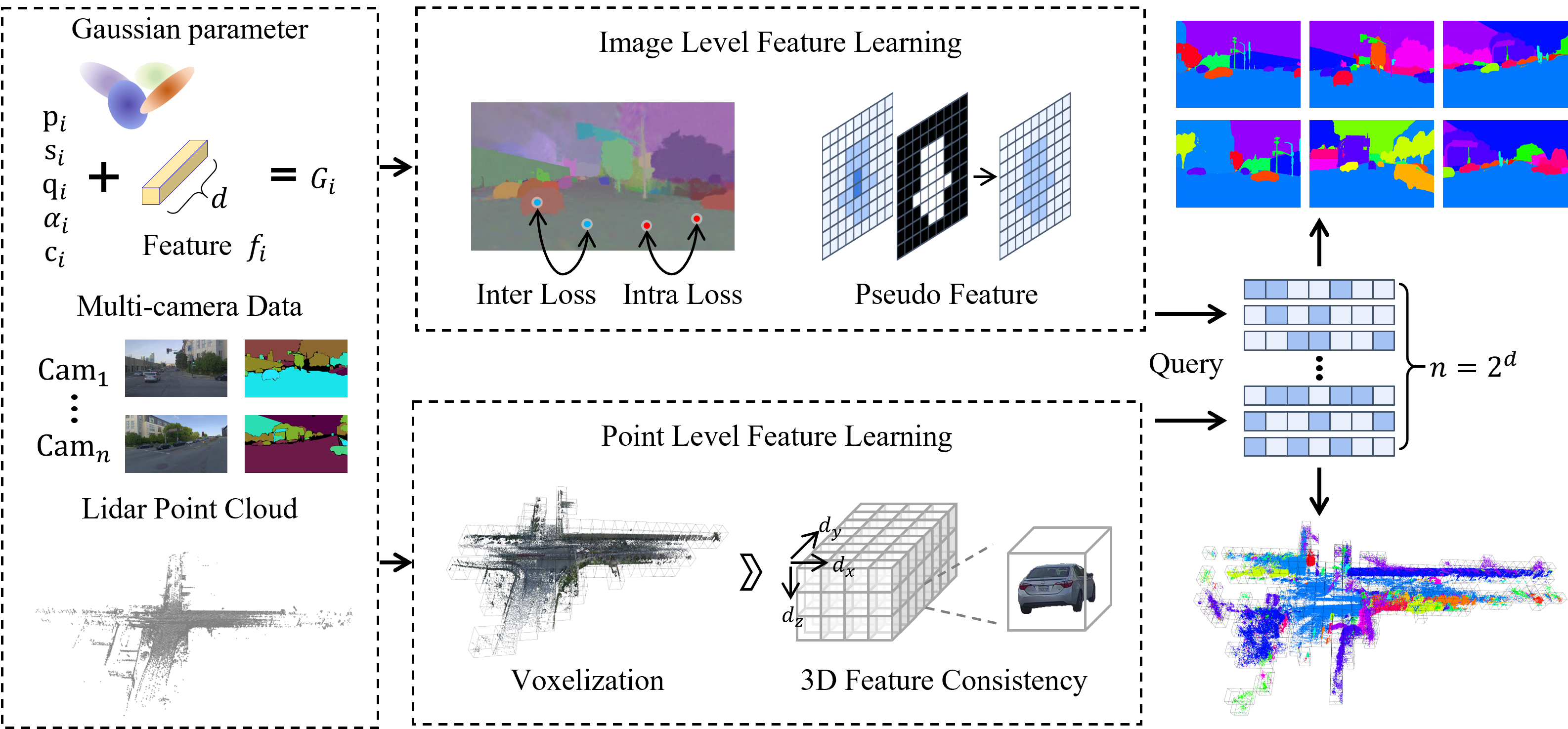

Framework Overview. We extend Gaussian attributes with an instance feature dimension and optimize the scene from multi-view images and LiDAR points. A contrastive loss and a voting-based pseudo-supervision loss guide 2D feature learning, while a voxel-based consistency loss enforces 3D coherence by aligning the features of nearby Gaussians. Both 2D and 3D features are mapped to discrete instance IDs via a binarized static codebook.
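The following sketch shows one plausible reading of two of the components named in the caption. It is not the authors' implementation. We assume that the "binarized static codebook" is the fixed set of all 2^D sign patterns of a D-dimensional instance feature. Under that assumption, quantizing a feature amounts to thresholding each channel at zero and reading the resulting bits as an integer ID. We also assume that the voxel-based consistency loss buckets Gaussian centers into voxels of a hypothetical size `voxel_size` and penalizes each Gaussian's squared deviation from its voxel's mean feature. The function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


# Hypothetical sketch: assumes the static codebook is every D-bit sign pattern,
# so the nearest codeword of a feature is just its per-channel sign.
def feature_to_instance_id(feature: Sequence[float]) -> int:
    """Map a continuous D-dim instance feature to a discrete ID via sign bits."""
    instance_id = 0
    for bit_index, value in enumerate(feature):
        if value > 0:  # positive channel -> bit set
            instance_id |= 1 << bit_index
    return instance_id


# Hypothetical voxel consistency loss: Gaussians whose centers fall into the
# same voxel are pulled toward that voxel's mean feature (mean squared deviation).
def voxel_consistency_loss(
    centers: Sequence[Tuple[float, float, float]],
    features: Sequence[Sequence[float]],
    voxel_size: float,
) -> float:
    # Group Gaussian indices by the integer voxel that contains their center.
    voxels: Dict[Tuple[int, int, int], List[int]] = defaultdict(list)
    for idx, (x, y, z) in enumerate(centers):
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        voxels[key].append(idx)

    total, count = 0.0, 0
    for members in voxels.values():
        dim = len(features[members[0]])
        # Mean instance feature of all Gaussians in this voxel.
        mean = [sum(features[i][d] for i in members) / len(members) for d in range(dim)]
        for i in members:
            total += sum((features[i][d] - mean[d]) ** 2 for d in range(dim))
            count += 1
    return total / count if count else 0.0
```

For example, `feature_to_instance_id([0.5, -0.2, 0.9])` sets bits 0 and 2 and therefore returns 5. In this reading the codebook is "static" because it needs no learned entries: every sign pattern is already a valid ID.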

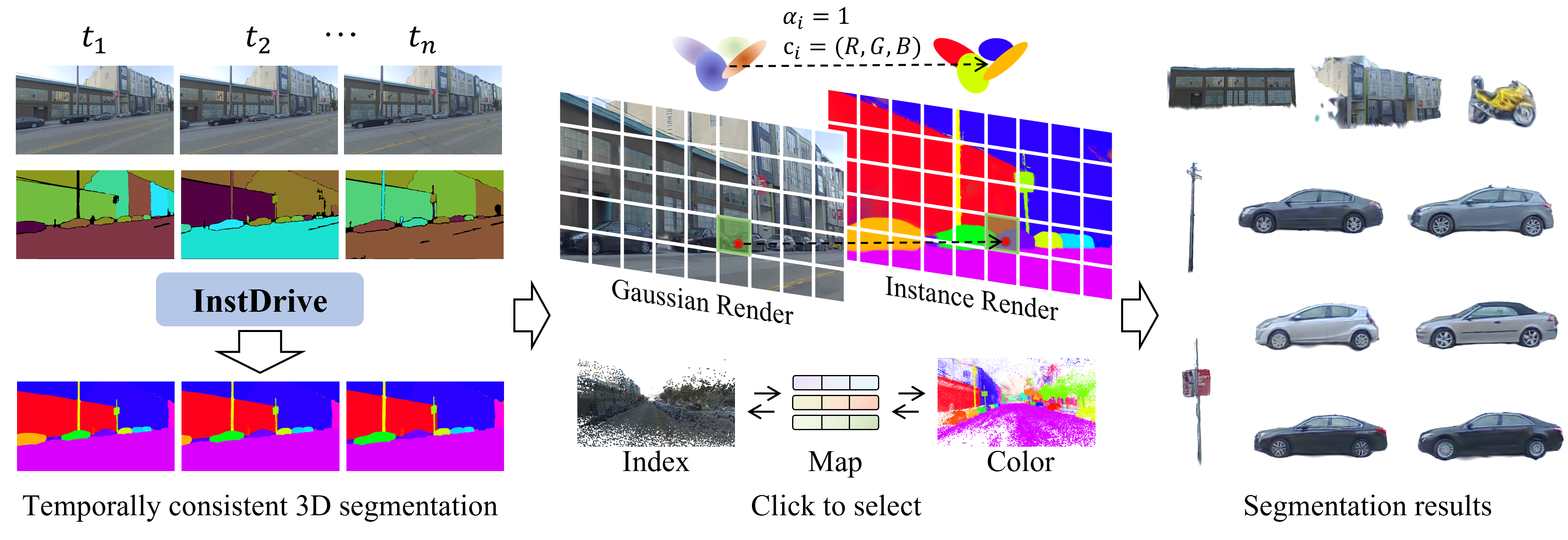

Existing reconstruction methods do not achieve structured 3D reconstruction with instance-level editability in dynamic driving scenes. To address this, we propose InstDrive, which supervises training directly with SAM-processed video frames and does not require matching instances across frames. A color map shared between 2D and 3D establishes a bijection between instance IDs and colors. During real-time rendering, the trained Gaussians are assigned full opacity and colored according to their instance IDs. When the user clicks a pixel, we read its rendered color, map that color back to an instance ID through the color map, and select all Gaussians associated with that ID. This enables real-time, interactive selection and manipulation of 3D Gaussian instances.
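The click-to-select pipeline can be sketched as follows. This is a minimal illustration, not the InstDrive code. It assumes that instance IDs fit in 24 bits and are packed directly into the three 8-bit RGB channels, which gives a bijective color map. It also assumes that the rendered ID image holds exact, unblended colors, which is the effect that assigning full opacity aims for. The image is represented as a plain H×W list of RGB tuples, and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


# Hypothetical shared color map: pack a 24-bit instance ID into (R, G, B) bytes,
# so ID -> color and color -> ID are exact inverses.
def id_to_color(instance_id: int) -> RGB:
    if not 0 <= instance_id < 1 << 24:
        raise ValueError("instance ID must fit in 24 bits")
    return ((instance_id >> 16) & 0xFF, (instance_id >> 8) & 0xFF, instance_id & 0xFF)


def color_to_id(color: RGB) -> int:
    r, g, b = color
    return (r << 16) | (g << 8) | b


def select_by_click(
    rendered: Sequence[Sequence[RGB]],  # H x W image rendered with ID colors
    click_xy: Tuple[int, int],          # (x, y) pixel coordinates of the click
    gaussian_ids: Sequence[int],        # instance ID of each Gaussian
) -> List[int]:
    """Return the indices of all Gaussians in the instance under the clicked pixel."""
    x, y = click_xy
    picked_id = color_to_id(rendered[y][x])  # row-major: index by y, then x
    return [i for i, gid in enumerate(gaussian_ids) if gid == picked_id]
```

Because the lookup is a single pixel read followed by an inverse color mapping, selection cost does not depend on ray casting against the Gaussians. The final filter over `gaussian_ids` is a linear scan, which a real system might replace with a precomputed ID-to-indices index.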